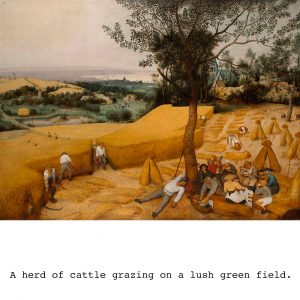

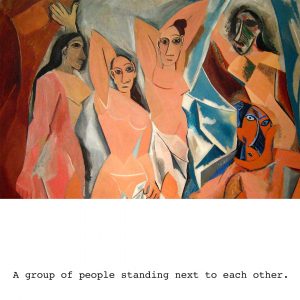

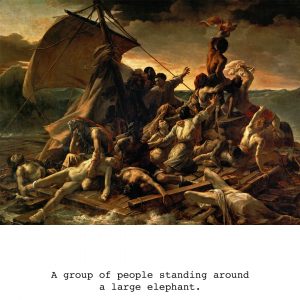

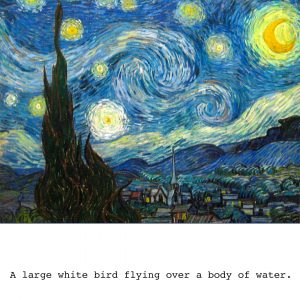

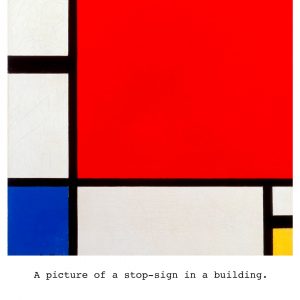

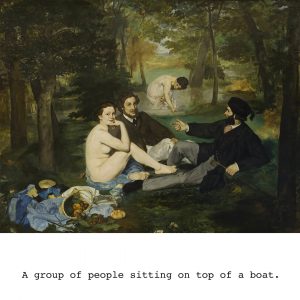

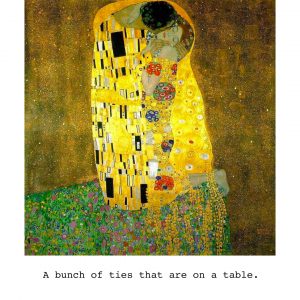

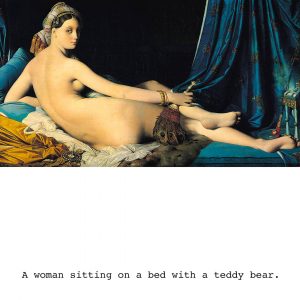

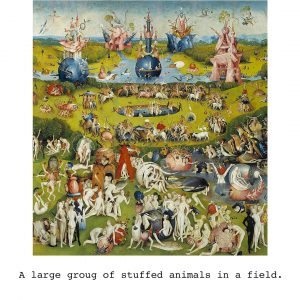

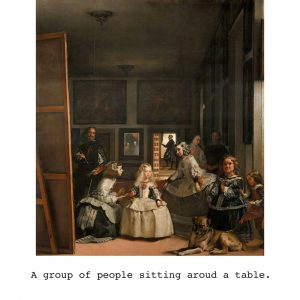

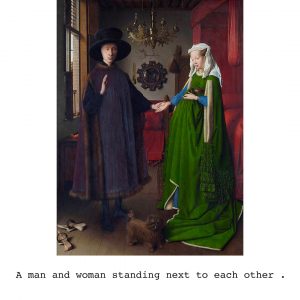

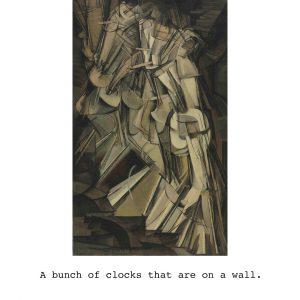

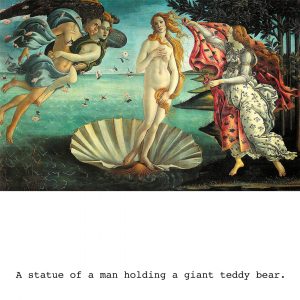

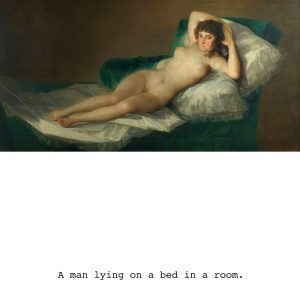

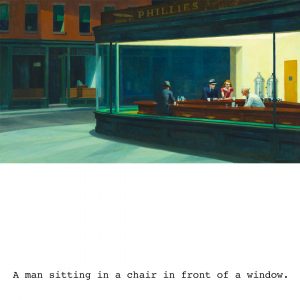

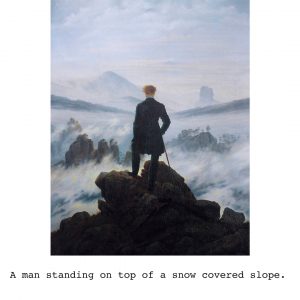

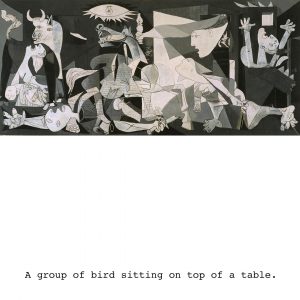

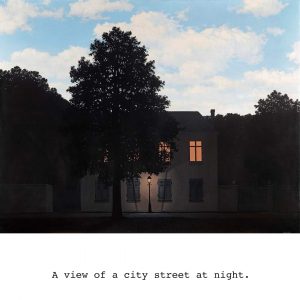

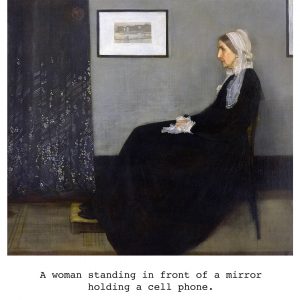

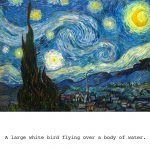

How do algorithms see the world? How does a computer vision algorithm experience masterpieces? How do artworks inform today’s world? WYSIWYG explores how machines make sense of the world. Disruptive technologies reshape our paradigms: machine learning is becoming a design material. WYSIWYG investigates new tools that try to extend the viewer’s field of vision. Through the lens of an image captioning model, complete sentences are generated from an input image, and the result is computed automatically. This process creates unconventional connections between the input and the output. The description highlights a gap between the representation of the work and its subject on one side, and what the machine perceives on the other. WYSIWYG uses the ‘im2txt’ model developed by Oriol Vinyals, Alexander Toshev, Samy Bengio and Dumitru Erhan in 2015, as made available on the RunwayML platform. This model sits at the intersection of deep learning, computer vision and natural language processing.

Using new media, Béatrice Lartigue creates installations that explore our perception of space and sound. In these pieces, the visitor becomes an actor; they create a sense of disorientation and offer a sensory interpretation of intangible phenomena by giving them material form, such as beams of light and sound in space (Passifolia, 2020), the force of the wind on the landscape (Nebula, 2018), or a musical score unfolding in three dimensions (Portée/, 2014). Her work has been exhibited at the Barbican Centre (London, GBR), the Miraikan Museum (Tokyo, JPN), the Museum of Digital Art (Zurich, CHE), the DMuseum (Seoul, KOR) and the Centre Pompidou (Paris, FRA). Béatrice Lartigue has received several international distinctions, including a New Frontier selection at the Sundance Film Festival (Notes on Blindness) and the Lumen Prize Performance Award (Portée/). She teaches Media and Interaction Design at Gobelins (Paris, FRA) and regularly leads workshops at institutions such as ECAL (Lausanne, CHE) and Estienne (Paris, FRA).